Aquaai, Aquabyte employ robotics and vision software to help farmers tackle animal health tasks, improve feed efficiency

Alf-Gøran Knutsen, CEO of Norwegian salmon farmer Kvarøy Fiskeoppdrett, has predicted that aquaculture will soon benefit from the arrival of technology that offers improved monitoring of fish size and health, along with infrastructure and other important aspects of production. Kvarøy’s salmon farms, located on the Arctic Circle in Norway, stand to benefit from examples of this new technology slated for deployment this June.

“We are hoping to gain a minimum of 5 percent on feed efficiency. For each percent, it will save us $175,000,” Knutsen said. “Also, we are hoping to gain on being better able to see if there is a problem or disease with the fish.”
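Knutsen's figures imply a simple back-of-the-envelope estimate. The sketch below multiplies the two numbers he quotes; the function name and the assumption that savings scale linearly with each percentage point are illustrative and do not come from Kvarøy.

```python
# Figures quoted by Knutsen in the article.
SAVINGS_PER_PERCENT_USD = 175_000  # savings for each percent of feed efficiency gained
TARGET_GAIN_PERCENT = 5            # minimum gain Kvarøy hopes for


def projected_savings(gain_percent, per_percent_usd=SAVINGS_PER_PERCENT_USD):
    """Estimate savings, assuming they scale linearly with the gain (an assumption)."""
    return gain_percent * per_percent_usd


print(projected_savings(TARGET_GAIN_PERCENT))  # 875000
```

Under that linear assumption, hitting the 5 percent minimum would be worth $875,000.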

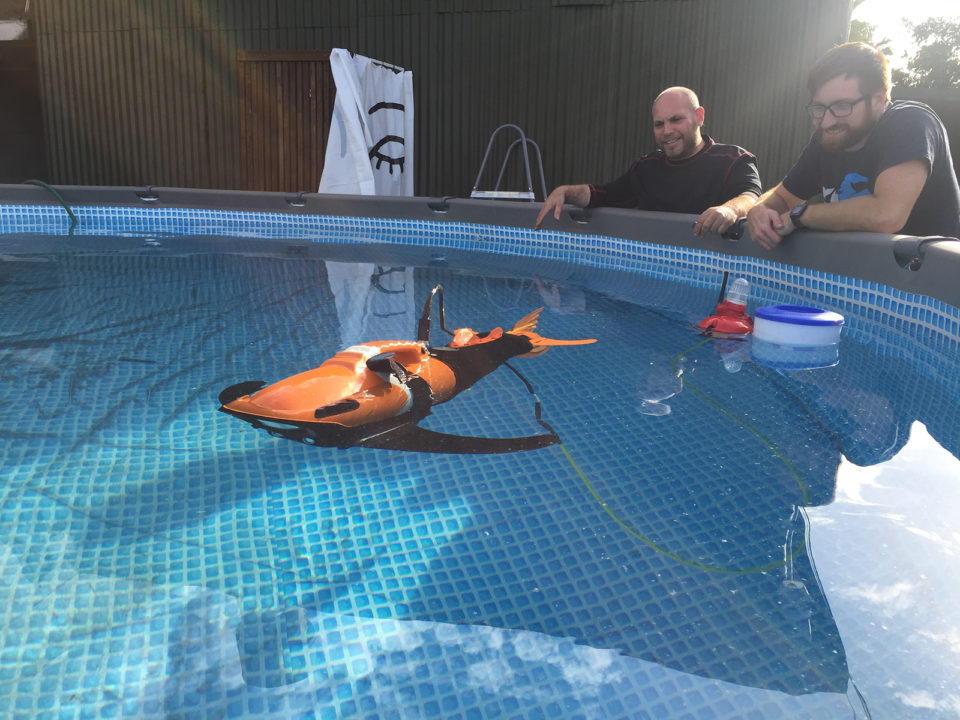

In Kvarøy’s case the new technology comes via robotic fish supplied by San Diego-based startup Aquaai. After prototypes were deployed and tested for two weeks by Kvarøy in extreme weather conditions in November 2017, eight production models of the artificial fish carrying cameras and other sensors will begin swimming beside the real thing in Kvarøy’s pens this summer.

Another example of new technology comes from Aquabyte, which has offices in San Francisco and Bergen, Norway. The startup is currently working with Norwegian researchers and fish farmers to implement camera-based machine-learning techniques to measure the size and weight of fish as well as to count how many sea lice, if any, are present. According to CEO Bryton Shang, Aquabyte will release its first products later this year.

Aquabyte’s software will learn on its own through feedback about how well its algorithms do in evaluating fish, Shang said. As a result, its evaluations should become more efficient and accurate over time.

With machine learning, the software is given training examples and learns from them. For instance, experts would put bounding boxes on images to highlight the location of sea lice. The software then figures out how what’s inside the box is different from what’s outside it. With enough examples, the machine learns how to reliably spot sea lice. Continued feedback, such as from ongoing expert review of a sample of the results of software screening, further improves this ability to detect sea lice.
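The bounding-box idea can be illustrated with a deliberately tiny sketch. It is not Aquabyte's method, which the article does not detail; production systems typically rely on neural networks trained on many images. Here a toy "model" learns only a single brightness cutoff from one labeled image, and every name and number is hypothetical.

```python
from statistics import mean


def split_pixels(image, box):
    """Separate pixel values inside an expert-drawn box from those outside it.

    box is (left, top, right, bottom); right and bottom are exclusive.
    """
    left, top, right, bottom = box
    inside, outside = [], []
    for y, row in enumerate(image):
        for x, value in enumerate(row):
            if left <= x < right and top <= y < bottom:
                inside.append(value)
            else:
                outside.append(value)
    return inside, outside


def learn_model(labeled_examples):
    """Learn a cutoff halfway between the average 'inside' and 'outside' brightness."""
    inside_all, outside_all = [], []
    for image, box in labeled_examples:
        inside, outside = split_pixels(image, box)
        inside_all.extend(inside)
        outside_all.extend(outside)
    inside_mean, outside_mean = mean(inside_all), mean(outside_all)
    threshold = (inside_mean + outside_mean) / 2
    # Record which side of the cutoff the labeled regions fall on.
    return threshold, inside_mean < outside_mean


def looks_like_louse(patch, model):
    """Flag a patch whose average brightness is on the 'inside the box' side."""
    threshold, lice_are_darker = model
    patch_mean = mean(value for row in patch for value in row)
    return (patch_mean < threshold) == lice_are_darker


# Toy grayscale image: a dark 2x2 "louse" on bright fish skin (made-up values).
image = [
    [200, 200, 200, 200],
    [200, 40, 50, 200],
    [200, 45, 35, 200],
    [200, 200, 200, 200],
]
expert_box = (1, 1, 3, 3)  # hypothetical expert annotation

model = learn_model([(image, expert_box)])
print(looks_like_louse([[30, 60], [50, 40]], model))      # True
print(looks_like_louse([[190, 210], [205, 195]], model))  # False
```

More labeled images would simply extend the list passed to `learn_model`, which is the sense in which additional examples refine what the software has learned.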

One thing the two approaches have in common is that neither is focused on the cameras and other sensors that capture images of the fish or measure environmental conditions.

“First and foremost, we are an underwater drone. We’re a platform. We carry any payload,” said Aquaai CEO Liane Thompson. “Most of the sensors are plug-and-play. We’re not inventing sensors. Our patents are in the design and mechanics and the flexible platform itself.”

She added that Aquaai plans to eventually implement artificial intelligence technology that adapts and learns. The units being delivered this year, though, will not have this capability because it is not needed.

Dubbed Nammu for the goddess of the sea, the robotic fish mimics how actual fish swim, according to Simeon Pieterkosky, chief visionary officer and lead designer of the product. That brings benefits.

“We made it with fewer parts than pretty much anything else,” Pieterkosky said. “We made it possible to not only increase the power management system, meaning the length of time that the actual fish swims, but also the durability of the actual product.”

The robot can typically swim for about nine hours before it runs out of power and must come back in, but Pieterkosky anticipated that it could spend up to 10 months in the water before maintenance is needed. The sensors it carries, though, may need to be cleaned more often.

In addition to the design, advances such as 3D printing and the advent of smaller, less power-hungry cameras and sensors make the underwater drone possible and the data it collects useful. The ability of the robot to swim alongside fish and be accepted by them allows for superior data acquisition, according to Thompson.

Aquaai envisions its robots being used to monitor fish for health and size, thereby allowing feeding to be optimized. The drones could also be used to inspect nets and other infrastructure, as well as carry out other tasks. This could be done following a pre-programmed sequence or through manual control.

As for Aquabyte’s solution, it is based, in part, on techniques originally developed to help self-driving cars navigate city streets and other environments. Aquabyte’s engineers then refined these and other technologies into something suitable for aquaculture.

“The heart of the algorithm is the computer vision software that we built. We are able to analyze the camera footage to automatically determine the fish’s size. We also have an algorithm that can determine the shape and weight of the fish,” said Aquabyte’s Shang. “We also have an optical detection system that can detect sea lice.”

This does not require the addition of special cameras, according to Shang. Instead, all that is needed is off-the-shelf cameras and existing feeding cameras. The software does most of the work required to turn raw images into data on fish size, health and more.

Humans, however, will remain an integral part of the solution, with experts telling the software on an ongoing basis when it is right and when it is wrong, Shang noted. Keeping people in the loop ensures that algorithms are working correctly and continuously improving, without getting humans bogged down in the labor-intensive and error-prone task of manually looking at every frame captured by a camera.
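One way to picture this kind of human-in-the-loop review is a spot check: an expert labels a random sample of frames rather than all of them, and any disagreements are kept as corrections for retraining. The sketch below is an illustration under that assumption, not a description of Aquabyte's workflow; the function name, the 10 percent sample rate and the labels are hypothetical.

```python
import random


def review_sample(predictions, expert_label, sample_rate=0.1, seed=0):
    """Have an expert check a random fraction of the software's predictions.

    predictions: list of (frame_id, predicted_label) pairs.
    expert_label: callable that returns the expert's label for a frame_id.
    Returns (agreement_rate, corrections); corrections are (frame_id, label)
    pairs that could be fed back as new training examples.
    """
    if not predictions:
        return None, []
    rng = random.Random(seed)
    sample_size = max(1, round(len(predictions) * sample_rate))
    sample = rng.sample(predictions, sample_size)
    corrections = []
    for frame_id, predicted in sample:
        truth = expert_label(frame_id)
        if truth != predicted:
            corrections.append((frame_id, truth))
    agreement_rate = 1 - len(corrections) / sample_size
    return agreement_rate, corrections


# Made-up example: 100 frames in which the expert sees no lice anywhere.
expert_view = {frame_id: "no_lice" for frame_id in range(100)}
all_correct = [(frame_id, "no_lice") for frame_id in range(100)]
all_wrong = [(frame_id, "lice") for frame_id in range(100)]

print(review_sample(all_correct, expert_view.get))        # (1.0, [])
print(len(review_sample(all_wrong, expert_view.get)[1]))  # 10
```

In this example the expert looks at only 10 of the 100 frames, which is the point of sampling: people verify the software's work without reviewing every frame.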

It is not yet certain which of these approaches, or others in development, will succeed. It’s possible that they may be combined, since some could be complementary.

As for the future, Kvarøy’s Knutsen is already considering the next step in innovation. When asked what new monitoring capabilities and technologies would be useful, he mentioned sonar.

“Sonar would help see where the fish is in the pen at any time, and feeding could be adjusted accordingly,” he said.

Author

Hank Hogan

Hank Hogan is a freelance writer based in Reno, Nevada, who covers science and technology. His work has appeared in publications ranging from Boys’ Life to New Scientist.
